AI in Smart Buildings #5 — Prototyping & Assembly

Oct 16, 2022 | Jagannath Rajagopal | 8 min read

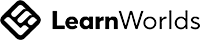

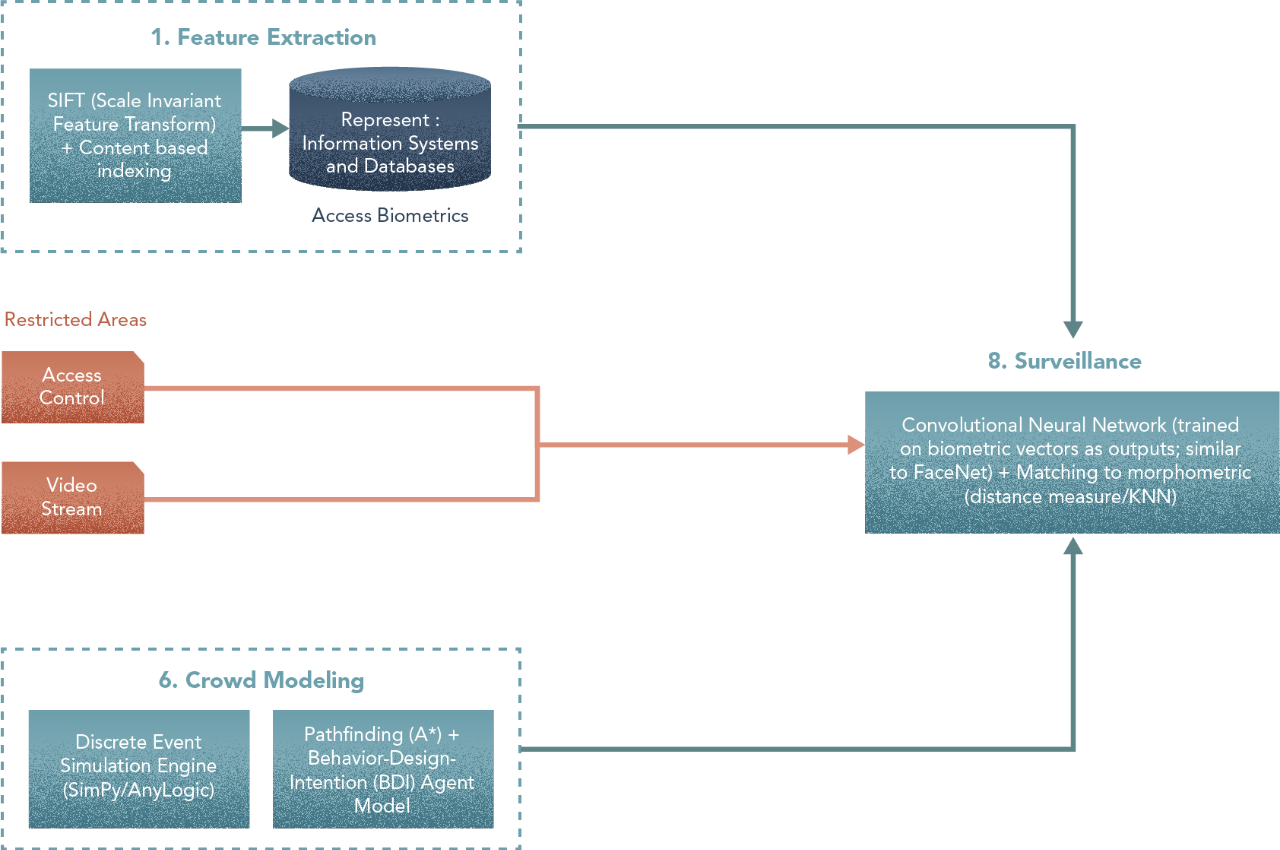

When we created Scenarios, we focused on translating methods into techniques. If the method was Computer Vision (CV), we chose a CV technique like the Scale Invariant Feature Transform (SIFT).

In the resulting technique diagram, steps 1–4 are independent of the outcomes of other steps, while steps 5–9 are not. At this stage, all steps are built regardless. How we test them differs, however: independent steps are tested differently from dependent ones.

Let’s start. Treat the above as the initial version of the architecture, as the input to Prototyping and Assembly. Each step is described a little further below. The idea is to do this iteratively. Build your initial version first, along with the requisite metrics. This would be a baseline for further experimentation.

As before, we aim to be illustrative and pedagogical in showcasing how Hero Methods may be applied to complex problems in AI. Actual problems may involve fewer or more steps depending on the scenario.

- - -

Prototyping

During prototyping, we’ll test independent steps not just to verify the build is bug-free, but also to verify modelling and use case objectives. For dependent steps, we’ll only verify the build is bug-free. Testing them to verify modelling and use-case objectives will be accomplished as part of Assembly.

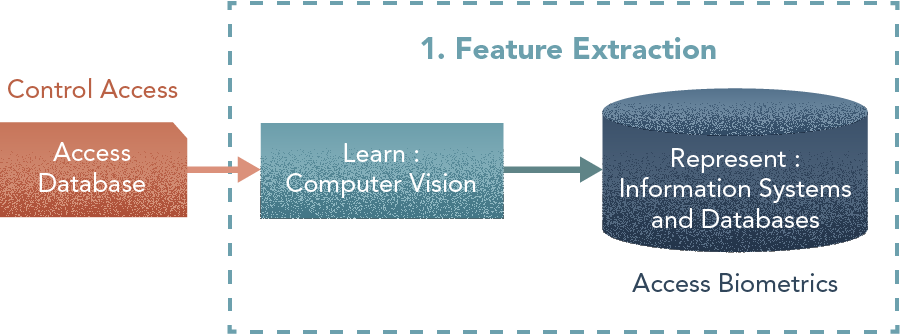

1. Feature Extraction

Steps

- Mark key points with SIFT

- Obtain morphometric vectors

- Perform Content-based Indexing

- Load into Database

Metrics & Indicators

Sensitivity and specificity of the validation process.
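As a rough illustration of the two indicators named above, here is a minimal sketch that computes sensitivity and specificity from binary validation results. The function name and the sample labels are made up for illustration; they are not taken from the actual build.

```python
def sensitivity_specificity(y_true, y_pred):
    """Return (sensitivity, specificity) for binary labels (True = correct match)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t and p)          # true positives
    tn = sum(1 for t, p in pairs if not t and not p)  # true negatives
    fp = sum(1 for t, p in pairs if not t and p)      # false positives
    fn = sum(1 for t, p in pairs if t and not p)      # false negatives
    sensitivity = tp / (tp + fn) if (tp + fn) else 0.0
    specificity = tn / (tn + fp) if (tn + fp) else 0.0
    return sensitivity, specificity


# Hypothetical validation run: did the indexed lookup return the right person?
y_true = [True, True, True, False, False, False, False, True]
y_pred = [True, True, False, False, False, True, False, True]
sens, spec = sensitivity_specificity(y_true, y_pred)
print(f"sensitivity={sens:.2f} specificity={spec:.2f}")
```

Tracking both numbers matters because a lookup that matches everyone scores perfectly on sensitivity while failing on specificity.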

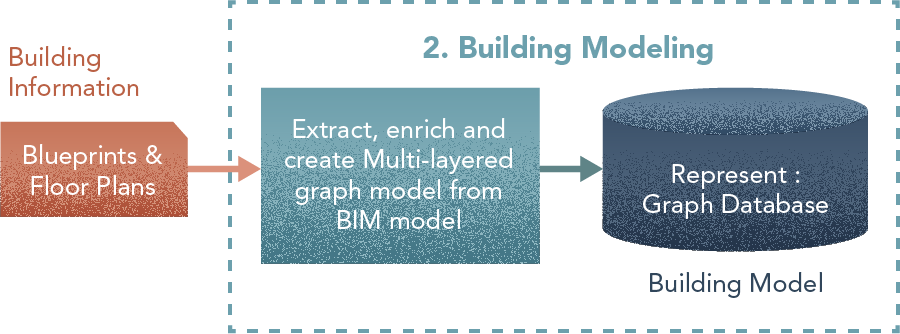

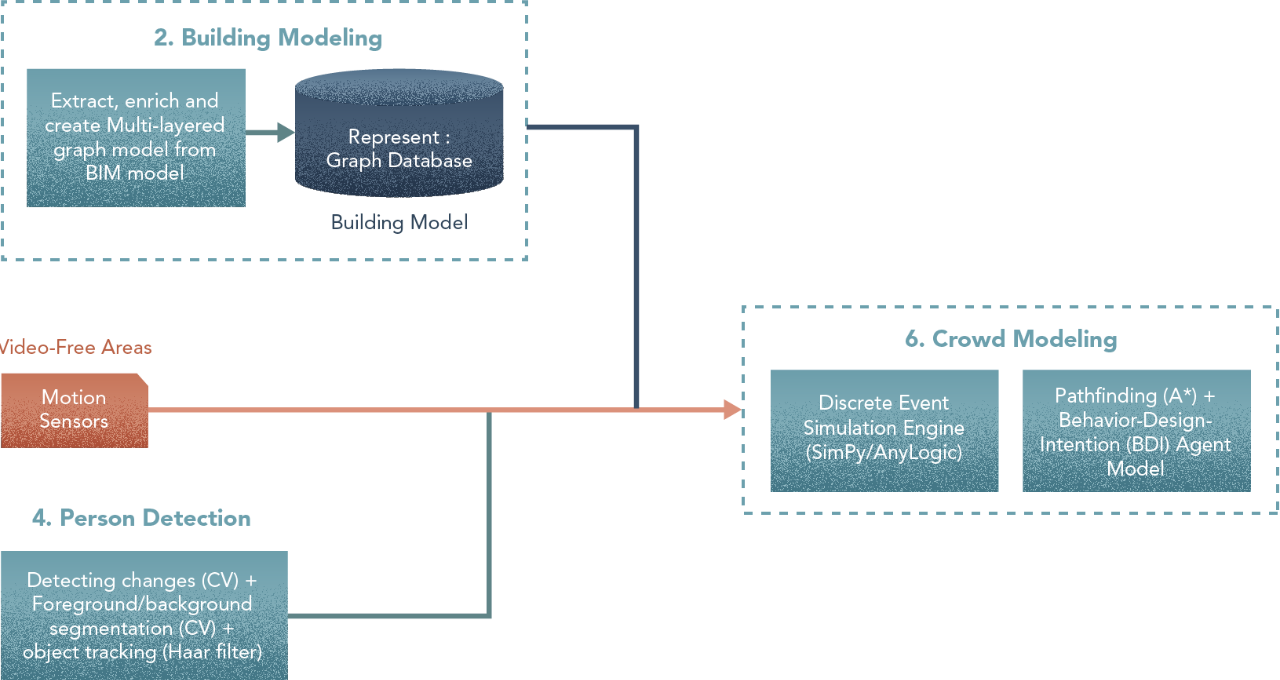

2. Building Model

Steps

- Extract spaces/locations from the BIM model — rooms, open areas etc.

- Model into a graph with nodes = spaces and edges = connections (doors, stairs, etc).

- Enrich nodes (Data on area, security level, space type, etc) and the edges (Data on time to traverse, bandwidth, etc).

Metrics & Indicators

Graph and network metrics (e.g., Centrality, Degree, Motif, or Clustering).
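To make the graph idea concrete, here is a small sketch under stated assumptions: the spaces, attributes, and connections are invented, and the graph is a plain dictionary rather than whatever structure a BIM export would give you. It builds the enriched graph and computes degree centrality, one of the metrics listed above.

```python
# Hypothetical spaces (nodes), enriched with area, security level and space type.
spaces = {
    "lobby": {"area_m2": 120, "security_level": 0, "type": "open area"},
    "corridor": {"area_m2": 40, "security_level": 0, "type": "circulation"},
    "office": {"area_m2": 25, "security_level": 1, "type": "room"},
    "server_room": {"area_m2": 15, "security_level": 3, "type": "room"},
}

# Hypothetical connections (edges), enriched with the connector and time to traverse.
connections = [
    ("lobby", "corridor", {"via": "door", "traverse_s": 10}),
    ("corridor", "office", {"via": "door", "traverse_s": 5}),
    ("corridor", "server_room", {"via": "door", "traverse_s": 8}),
]


def build_graph(spaces, connections):
    """Build an undirected adjacency map: node -> {neighbour: edge attributes}."""
    graph = {name: {} for name in spaces}
    for a, b, attrs in connections:
        graph[a][b] = attrs
        graph[b][a] = attrs
    return graph


def degree_centrality(graph):
    """Degree of each node divided by the maximum possible degree (n - 1)."""
    n = len(graph)
    if n < 2:
        return {node: 0.0 for node in graph}
    return {node: len(neighbours) / (n - 1) for node, neighbours in graph.items()}


graph = build_graph(spaces, connections)
centrality = degree_centrality(graph)
print(centrality)
```

In this toy layout the corridor has the highest centrality, which is the kind of sanity check these metrics allow before the graph is used downstream.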


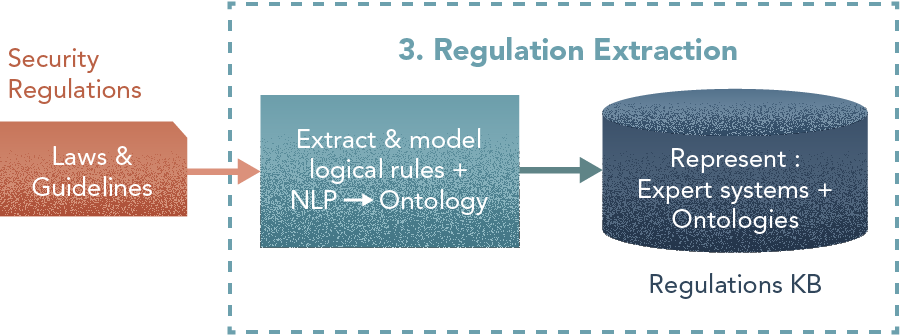

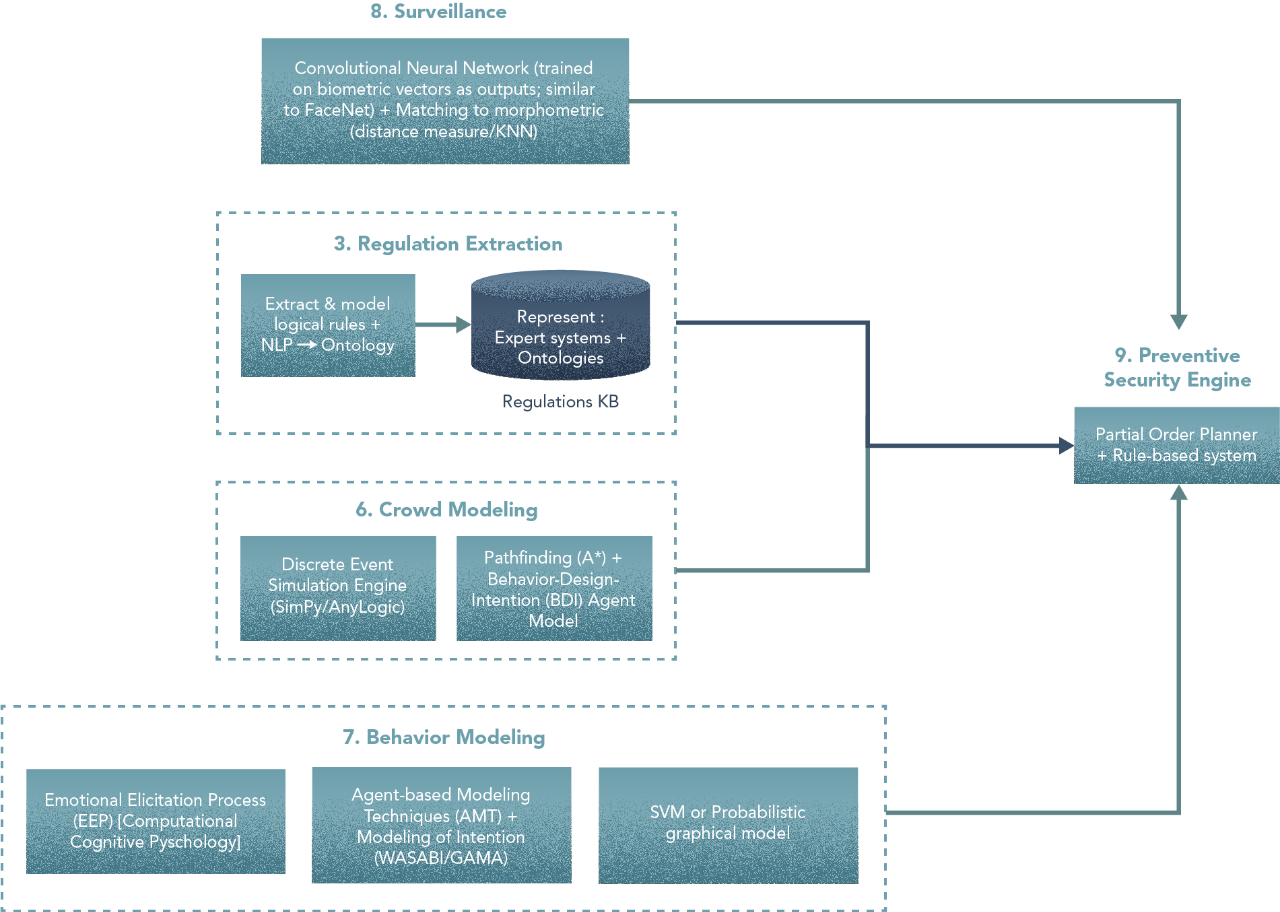

3. Regulation Extraction

Steps

- Define ontology terms and relationships from NLP tokenization.

- Expert-driven definition of rules (NLP to help in extracting sentence structures).

Metrics & Indicators

- Knowledge base & ontologies metrics (axioms, logical axioms, number of classes or properties).

- Subjective measurements: Extensibility, coverage, …
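For a feel of what the countable knowledge base metrics might look like, here is a deliberately simplified sketch. It assumes the ontology is held as (subject, relation, object) triples and that every subject is a class; both are assumptions made for illustration, and a real ontology language such as OWL distinguishes far more than this.

```python
# Hypothetical triples, as might be drafted from a regulation text.
triples = [
    ("ServerRoom", "is_a", "RestrictedSpace"),
    ("RestrictedSpace", "is_a", "Space"),
    ("RestrictedSpace", "requires", "AccessBadge"),
    ("FireExit", "is_a", "Space"),
    ("FireExit", "must_remain", "Unobstructed"),
]


def ontology_metrics(triples):
    """Count classes, properties and statements in a triple list.

    Assumption: subjects and the targets of "is_a" are classes; every other
    relation name is a property.
    """
    classes = {s for s, _, _ in triples} | {o for _, r, o in triples if r == "is_a"}
    properties = {r for _, r, _ in triples if r != "is_a"}
    return {
        "classes": len(classes),
        "properties": len(properties),
        "statements": len(triples),
    }


metrics = ontology_metrics(triples)
print(metrics)
```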

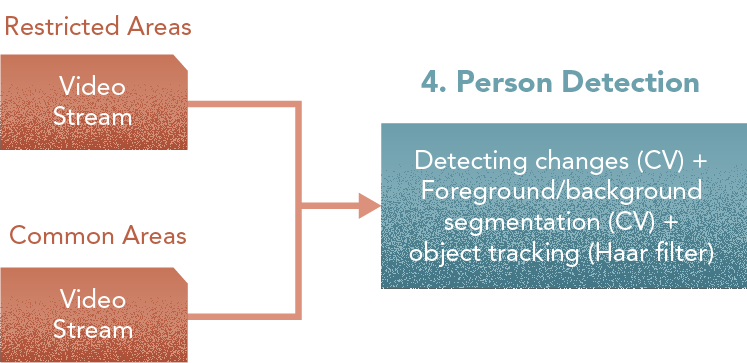

4. Person Detection

Steps

- Set up frame selection in video for changes and movement.

- Segment images to identify objects.

- Track object movement.

Metrics & Indicators

- Accuracy metrics (specificity and sensitivity)

- Multi-label metrics (exact match, 0/1 loss, hamming loss, F1-score, …)
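The first step above, frame selection, can be sketched with simple frame differencing. This is one possible technique, not necessarily the one used in the actual build; frames are tiny grayscale grids held as nested lists, and both thresholds are arbitrary values chosen for the example.

```python
def changed_fraction(prev, curr, pixel_threshold=25):
    """Fraction of pixels whose grayscale value changed by more than pixel_threshold."""
    total = changed = 0
    for row_prev, row_curr in zip(prev, curr):
        for a, b in zip(row_prev, row_curr):
            total += 1
            if abs(a - b) > pixel_threshold:
                changed += 1
    return changed / total if total else 0.0


def select_frames(frames, min_changed=0.1):
    """Return indices of frames that differ enough from the frame before them."""
    selected = []
    for i in range(1, len(frames)):
        if changed_fraction(frames[i - 1], frames[i]) >= min_changed:
            selected.append(i)
    return selected


# Hypothetical 3x3 frames: an empty scene, and the same scene with a bright object.
static = [[10, 10, 10], [10, 10, 10], [10, 10, 10]]
moving = [[10, 200, 10], [10, 200, 10], [10, 10, 10]]
frames = [static, static, moving, moving, static]
selected = select_frames(frames)
print(selected)
```

Only the frames where something appears or disappears are passed on, which keeps segmentation and tracking from running on every frame.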

- - -

Assembly

Steps 5–9 of the technique diagram depend on outputs from prior steps. All steps are built during Prototyping, but the dependent ones cannot be tested against modelling and use-case objectives until outcomes from the independent steps are available. Once those become available, the task of putting more than one piece together can commence.

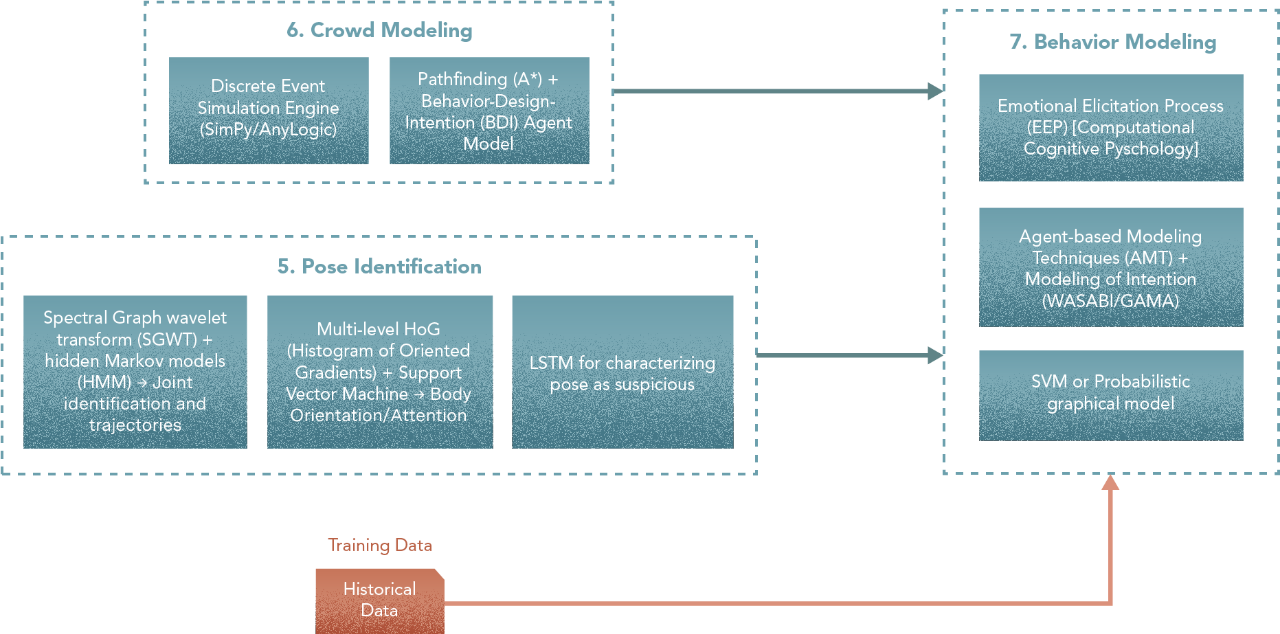

5. Pose Identification

Steps

- Detect reference points (joints), and track position and movement.

- Model the skeleton (as connections between keypoints/joints) based on relative distances.

- Characterize the pose (based on the skeleton position).

Metrics & Indicators

- Accuracy per body part (head, wrist, elbows, shoulders, hip, …)

- Distance from the actual pose

- Occlusion prediction metrics
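The first two indicators can be sketched as a per-joint distance plus a thresholded accuracy. The thresholded score used here is the Percentage of Correct Keypoints (PCK) idea, swapped in as one common way to express accuracy per body part; the joint coordinates and the threshold are invented for the example.

```python
import math


def per_joint_error(predicted, actual):
    """Euclidean distance between predicted and actual position for each joint."""
    return {joint: math.dist(predicted[joint], actual[joint]) for joint in actual}


def pck(errors, threshold):
    """Share of joints whose error is within the threshold (PCK-style score)."""
    correct = sum(1 for e in errors.values() if e <= threshold)
    return correct / len(errors)


# Hypothetical 2D keypoints in pixels.
actual = {"head": (50, 10), "wrist": (20, 60), "elbow": (30, 45), "hip": (50, 70)}
predicted = {"head": (53, 14), "wrist": (20, 72), "elbow": (30, 45), "hip": (50, 71)}

errors = per_joint_error(predicted, actual)
mean_error = sum(errors.values()) / len(errors)  # distance from the actual pose
score = pck(errors, threshold=6.0)
print(errors, mean_error, score)
```

Reporting errors per joint, rather than a single average, shows which body parts the model struggles with; here it is the wrist.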

6. Crowd Modelling

Steps

- Define agent goals using different profiles (a buyer, a security guard, store clerks, …).

- Create navigation maps (in combination with pathfinding algorithms) for agent movement between locations.

- Model as a discrete event simulation and make agents move within the building.

Metrics & Indicators

- Population-based measurements (queue sizes, waiting time, density metrics, …).

- Scenario analysis (subjective evaluation)
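For the navigation part, here is a minimal pathfinding sketch using Dijkstra's algorithm over a building graph whose edge weights are traversal times in seconds. Dijkstra is just one choice of pathfinding algorithm, and the layout and timings are hypothetical.

```python
import heapq


def shortest_path(graph, start, goal):
    """Dijkstra over a dict-of-dicts graph; returns (total time, list of spaces)."""
    queue = [(0.0, start, [start])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbour, seconds in graph[node].items():
            if neighbour not in visited:
                heapq.heappush(queue, (cost + seconds, neighbour, path + [neighbour]))
    return float("inf"), []  # goal not reachable from start


# Hypothetical building graph: space -> {connected space: seconds to traverse}.
graph = {
    "entrance": {"lobby": 5},
    "lobby": {"entrance": 5, "corridor": 10, "stairs": 20},
    "corridor": {"lobby": 10, "shop": 8},
    "stairs": {"lobby": 20, "shop": 4},
    "shop": {"corridor": 8, "stairs": 4},
}

cost, path = shortest_path(graph, "entrance", "shop")
print(cost, path)
```

An agent with the goal "shop" would follow this path in the simulation, and the traversal times become the durations of its movement events.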

7. Behaviour Modelling

Steps

- Model a perception system (as part of the agent design) for EEP.

- Model external events (a fire, an alarm, etc) and set parameters, area of effects, and implications to the perception system of the EEP model.

- Use the crowd model with these perception/event inputs to construct behaviour simulations for agents.

- Use historical data for the pose model to tag effective behaviour.

- Use a machine learning algorithm to analyze unexpected behaviour (observed vs. simulated).

Metrics & Indicators

Mood and emotion transition maps (PAD vectors)
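The event and perception steps above can be sketched very roughly as follows. This assumes an event has a circular area of effect and that an agent perceives it simply by being inside that area; the class names, fields, and the "evacuate" goal are all hypothetical, and a real perception model would be considerably richer.

```python
import math
from dataclasses import dataclass


@dataclass
class Event:
    kind: str         # e.g. "fire" or "alarm"
    position: tuple   # (x, y) in metres
    radius: float     # area of effect in metres


@dataclass
class Agent:
    name: str
    position: tuple
    goal: str = "shop"


def perceives(agent, event):
    """Assumption: an agent perceives an event when inside its area of effect."""
    return math.dist(agent.position, event.position) <= event.radius


def apply_event(agents, event, new_goal="evacuate"):
    """Switch the goal of every agent that perceives the event."""
    for agent in agents:
        if perceives(agent, event):
            agent.goal = new_goal
    return agents


agents = [Agent("buyer", (0.0, 0.0)), Agent("clerk", (30.0, 0.0))]
fire = Event("fire", (5.0, 0.0), radius=10.0)
apply_event(agents, fire)
print([(a.name, a.goal) for a in agents])
```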

8. Surveillance

Steps

- Use access control systems to tag persons navigating through the restricted areas.

- Segment video sequence for persons in the scene and try to match them with the tagged access events.

- For the persons in the scene, segment the face from the picture and apply transformations to correct perspective and minimize occlusion.

- For the transformed image, produce morphometric vectors and query them in the database (with some degree of matching).

- Integrate tag information and biometrics database outputs for more accurate identification.

Metrics & Indicators

- Accuracy

- Scenario analysis
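Querying the database "with some degree of matching" can be sketched as a nearest-neighbour lookup with a distance cut-off. The vectors, names, and the cut-off value below are invented, and the in-memory dictionary stands in for whatever biometrics database is actually used.

```python
import math


def best_match(query, database, max_distance):
    """Return (person_id, distance) of the closest stored vector.

    person_id is None when even the closest vector is further than max_distance.
    """
    best_id, best_dist = None, float("inf")
    for person_id, vector in database.items():
        d = math.dist(query, vector)
        if d < best_dist:
            best_id, best_dist = person_id, d
    if best_dist <= max_distance:
        return best_id, best_dist
    return None, best_dist


# Hypothetical morphometric vectors (three features per face).
database = {"alice": (0.10, 0.80, 0.30), "bob": (0.70, 0.20, 0.50)}

match, match_dist = best_match((0.12, 0.79, 0.33), database, max_distance=0.1)
unknown, unknown_dist = best_match((0.95, 0.95, 0.95), database, max_distance=0.1)
print(match, unknown)
```

The cut-off is where the trade-off between false alarms and missed identifications is set, so it is worth tuning against the accuracy metric above.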

9. Preventive Security Engine

Steps

- In the case of access violation threats, set up the system to activate the surveillance response.

- For incidents flagged based on input from building sensors (e.g., fire), set up the engine to apply the appropriate response plan for these situations.

Metrics & Indicators

- Scenario analysis

- Comparison of action plans by the planner (efficiency, redundancy, …)
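At its simplest, the engine's dispatch could look like the sketch below: a lookup from incident type to a response plan. The incident types, action names, and the fallback action are hypothetical placeholders; an actual engine would likely involve a planner rather than a fixed table.

```python
# Hypothetical response plans, keyed by incident type.
RESPONSE_PLANS = {
    "access_violation": ["activate_surveillance", "notify_security_desk"],
    "fire": ["sound_alarm", "unlock_fire_exits", "notify_fire_service"],
}


def respond(incident):
    """Return the list of actions for an incident, or escalate if none is defined."""
    plan = RESPONSE_PLANS.get(incident["type"])
    if plan is None:
        return ["escalate_to_operator"]
    return list(plan)  # copy, so callers cannot modify the stored plan


print(respond({"type": "fire", "source": "smoke_sensor_3"}))
print(respond({"type": "flood", "source": "basement_sensor"}))
```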

- - -

Experimentation

So what do you do if the initial approach does not meet expectations? Because of the granularity with which steps are defined, prototyped and assembled, you’ll be able to see which step or steps the problem lies in. In other words, our architecture is not one big black box, though it may use some smaller ones in certain places.

If a particular step is not working as expected, you need alternates — at different levels. If a particular sub-step is not working right, swap it out and put in a different technique. If the issue is with an independent step overall, then reconsider its setup. The further downstream the issue lies, the harder it is to isolate and fix, especially if the problem is one that cascades from earlier steps in the architecture. If so, you may need to reconsider the overall approach.
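One way to keep steps swappable is to treat the architecture as a list of named steps and record every intermediate output. The sketch below assumes each step is a plain function; the step functions and frame data are toy stand-ins, not the real detectors.

```python
def run_pipeline(steps, data):
    """Run named steps in order, keeping each intermediate output for inspection."""
    results = {}
    for name, step in steps:
        data = step(data)
        results[name] = data
    return results


# Toy stand-ins for two alternative techniques for the same sub-step.
def detect_strict(frames):
    return [f for f in frames if f["motion"] > 0.5]


def detect_sensitive(frames):
    return [f for f in frames if f["motion"] > 0.2]


def count_people(frames):
    return sum(f["people"] for f in frames)


frames = [
    {"motion": 0.3, "people": 1},
    {"motion": 0.7, "people": 2},
    {"motion": 0.1, "people": 0},
]

baseline = run_pipeline([("detect", detect_strict), ("count", count_people)], frames)
alternate = run_pipeline([("detect", detect_sensitive), ("count", count_people)], frames)
print(baseline["count"], alternate["count"])
```

Because the intermediate outputs are kept, you can see that the two runs already differ at the "detect" step, which is where the search for the problem would start.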

In Ideation, options are available for most methods. Likewise, in Scenarios, options are available for most techniques.

For Smart Buildings, there are different ways to approach the overall architecture depending on whether part of the tasks can be implemented into a single algorithm or process.

Combine Feature Extraction (1) + Facial Recognition (8)

Depending on the algorithm and the actual implementation, these two steps may be performed as a single process that classifies the images as a result of the Facial Recognition phase. This alternative depends heavily on the approach taken to solve the problem. A combined implementation of these two steps has to deal both with frontal images with good illumination (for Access Control) and with frames in a video sequence from a CCTV camera in a corridor, for instance. The task in both cases is to extract characteristics (features) that can be used to query the Biometrics DB either to open a security door (Access Control) or to trigger an alarm (Surveillance).

All the comments regarding these two steps, which we have mentioned before, may be considered in a tightly coupled implementation of these two tasks. To do this, the model will need to consider multiple types of input, both in training and in live operation, including access biometrics, input from security/access control devices, and CCTV. The model architecture will need to integrate these multiple inputs and produce a single output: whether there is a potential security breach or access violation. This can be challenging since machine learning models such as neural networks are harder to design and train when different modes of input are combined, especially static and time-series data.

Combine Person Detection (4) + Pose Identification (5)

Similarly, we may choose to detect persons and classify poses in a single algorithm. In this case, the outcome is the estimated pose as well as the persons detected (independent of the pose).

Combine Building Model (2) + Crowd Model (6)

Some crowd modelling approaches do not need the building model processed by step #2 and operate directly on a volumetric representation of a building. Usually, in these cases, the crowd model is very simple (mostly implementing a random walk or hard-coded agent behaviour). It is possible to include more advanced crowd modelling here but if so it would perform its own interpretation of the building and embed the building model in the simulation process. A more advanced model may also include a solver for pathfinding and planning agents (for an agent-based model).

Combine Building Model (2) + Preventive Security Engine (9)

In this case, the building model might be as simple as a list of spaces, with the security engine working at a level unrelated to the physical distribution of the spaces. Such engine implementations mostly act as a regulation checker or assist in carrying out security protocols.

Combine 2, 6 & 9

This combines the previous two alternatives, and also suits cases where there is a tight coupling between the simulation of crowds and the security engine. For use cases where security considerations mostly address emergency control & management, the engine considers different situations in which the crowds may react differently or operate under different parameters. In these cases, there is feedback between the emergency control engine and the crowd behaviour models. Also, 6 & 9 require pathfinding. Even if we don’t combine them, we may choose to either pre-process pathfinding as part of 2, or do it in 6/9.

- - -

At Kado, combining methods into an architecture to solve complex problems is what we do. Here’s the why — cuz this is compelling!

This is how we see the world

Machine Learning is King, but of narrow territory. Hero Methods do things that ML cannot. Taken together, not only do they help solve complex problems, they also lay the doorway to AI.

Get in touch

- Milton, ON L9T 6T2, Canada

- help@kado.ai

- +1 416 619 0517

Copyright © 2026
