A brief, partly-known history of Hero Methods: Why I built Kado

Oct 14, 2022 | Jagannath Rajagopal | 8 min read

You know, when I first wrote this down, I was told it was too long. Apparently, one needs a sound-bite these days. Something brief, catchy, short, simple. Problem is — with what we do, nothing is simple. It can’t be boiled and reduced down to something syrupy without losing vitality. We deal with complexity. This article in turn — about why I built Kado and what it’s about — is a long one. It’s over 1,700 words. It will take under 10 minutes of your time. But I promise you, it’s worth it. You’ll gain something. So here goes.

- - -

The first mathematical model of an artificial neuron dates back to 1943, and the perceptron followed in the late 1950s. But let’s not start there. This story begins in winter 1998, when I started grad school in Operations Research (OR).

Atlanta in 1998

Back then, there was no machine learning. There was, however, regression.

Further, there was heavy focus on Optimization and its forms. Mentions of Combinatorial Optimization would send cold shivers down our collective spines, testament to how tough those problems are to solve. It’s how the world would be now for neural nets, if there were no Open Source, PyTorch/TensorFlow or GPUs.

There was also a heavy focus on application. At the time, Supply Chain Management was a hot topic in Enterprise Software; this lent itself well to our time in grad school due to its overlaps with OR — Transportation Scheduling, Production Scheduling, Inventory Policy, Network & Location management. One of our profs was founder at a successful start-up whose app let corporations strategically optimize their supply chain networks.

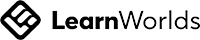

Another major method discussed everywhere was Simulation. It was treated as a core topic in Operations Research and much of our time was spent on this. Wherever there were queues, or flows of things and people, there was Monte Carlo simulation. Production lines, Supply Chains, road traffic, customer lines, baggage handling.

My internship was with a cohort in a company that ran Simulations of airport and airline ops: baggage systems, terminals, runway traffic, passenger flow, subways, harbours etc. Back at school, there was a lot of work conducted at labs in conjunction with the Department of Transportation to model and manage traffic flows on roads and highways across the country.

Regression was one topic in one class (called Statistical Methods). During my internship, a roommate — also a grad student in OR — briefly remarked about neural networks and how hard they were.

AGI is real AI….

Turning the page, in their paradigmatic textbook, Russell and Norvig focus on three families of methods: Problem Solving, Knowledge Representation, & Learning. They also discuss methods like perception, but the best perception models today are learning-based.

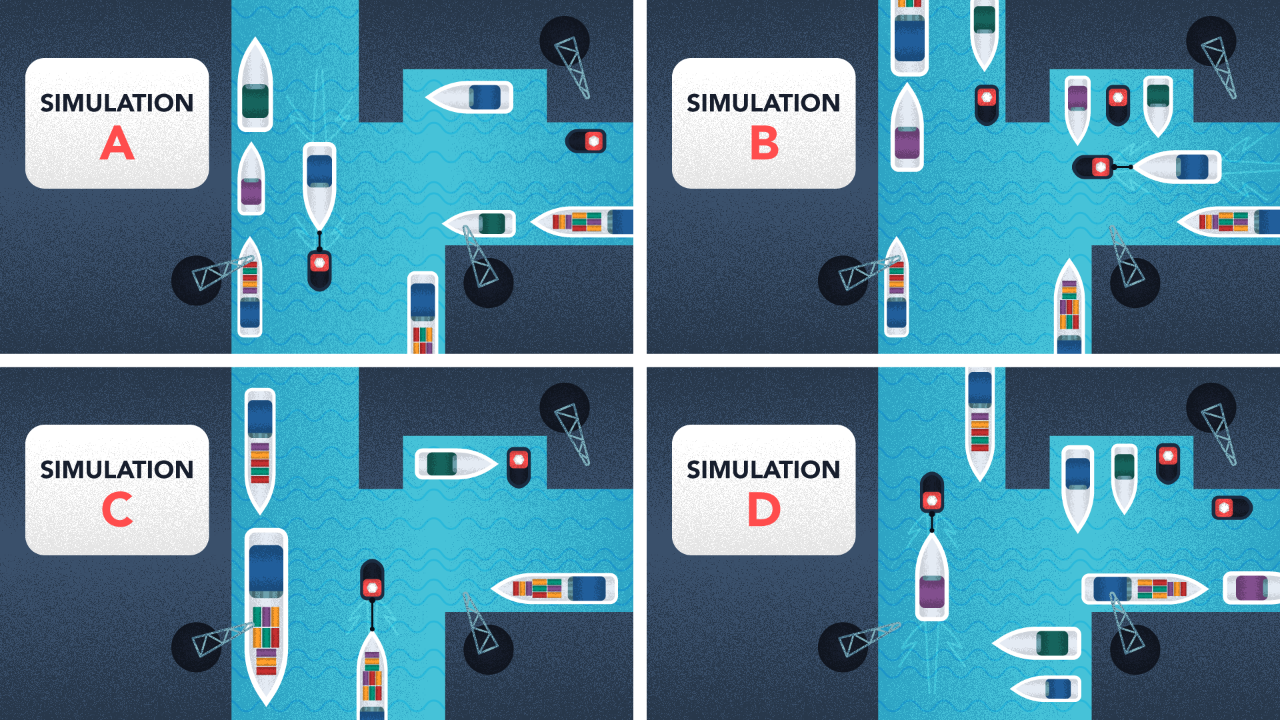

Search, in all forms — classical or otherwise — underpins much of research work today. If you need to find a needle in a multi-dimensional haystack, search is the one that comes to mind. Taken together with Logical Reasoning/Programming, Probabilistic Reasoning & Planning, they can loosely be placed under the umbrella of Problem Solving.

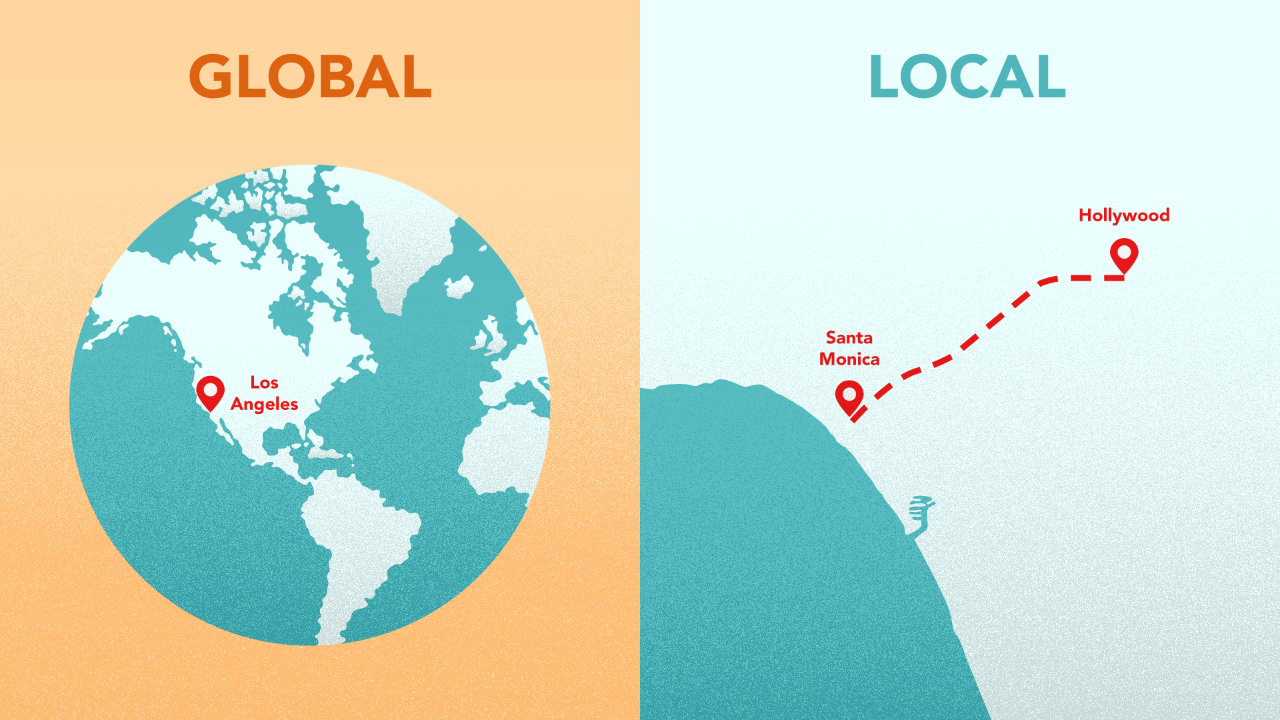

Knowledge Representation in its myriad forms, including graphs and ontologies, is everywhere. In the last decade and a half, graphs in particular have exploded in application. Ontologies have always been around; they were the basis of expert systems aka GOFAI [Good Old-Fashioned AI]. With the Semantic Web, Grakn (now TypeDB), Cyc and similar efforts, these are making a comeback.

The onset of the last AI winter happened when the connectionist school hit a snag with neural networks. The 3 papers in 2006/7 by Hinton, LeCun and Bengio are important partly because, in addition to ushering in the ML renaissance, they gave the field of AGI its next spring. I don’t mean the “AI” that dumb marketing uses as a label for Machine Learning; I am referring to the kind of real AI which mostly resides in protected black sites and labs around the world.

…while Machine Learning isn’t

Speaking of dumb marketing, there is no denying Machine Learning is king today in this domain. Peer under the covers of a learning method and you’ll find an optimization method. Backprop (the most common training method) and evolution (the second most common) both rely on optimization to surface suitable model parameters.
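To make the "optimization under the covers" point concrete, here is a minimal sketch of fitting a straight line by gradient descent, the kind of optimizer that backprop feeds with gradients. The toy data, the learning rate and the function names are my own assumptions for illustration, not anything from a real project.

```python
# Hypothetical toy data generated from y = 2x + 1 (illustrative only).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]


def mse(w, b):
    """Mean squared error of the line y = w*x + b on the toy data."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def fit(steps=5000, lr=0.02):
    """Plain gradient descent on the MSE; 'learning' is just this loop."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Partial derivatives of the MSE with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step downhill.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


w, b = fit()
print(f"w={w:.4f}, b={b:.4f}, mse={mse(w, b):.2e}")
```

Swap the gradient step for "mutate, evaluate, keep the fittest" and you have the evolutionary flavour; either way, the model parameters are whatever the optimizer surfaces.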

However, Machine Learning has weaknesses. What if you need your models to learn meaning? What if you need to teach the machine something formal? What if the problem is fundamentally characterized by low data volumes? What if you need to represent facts? What if you will always encounter patterns that don’t repeat? What if you need your model to imagine scenarios? These lie outside its capability. Expecting Learning methods to do something they cannot makes no sense. We need to go broader, not deeper, in a search to resolve these.

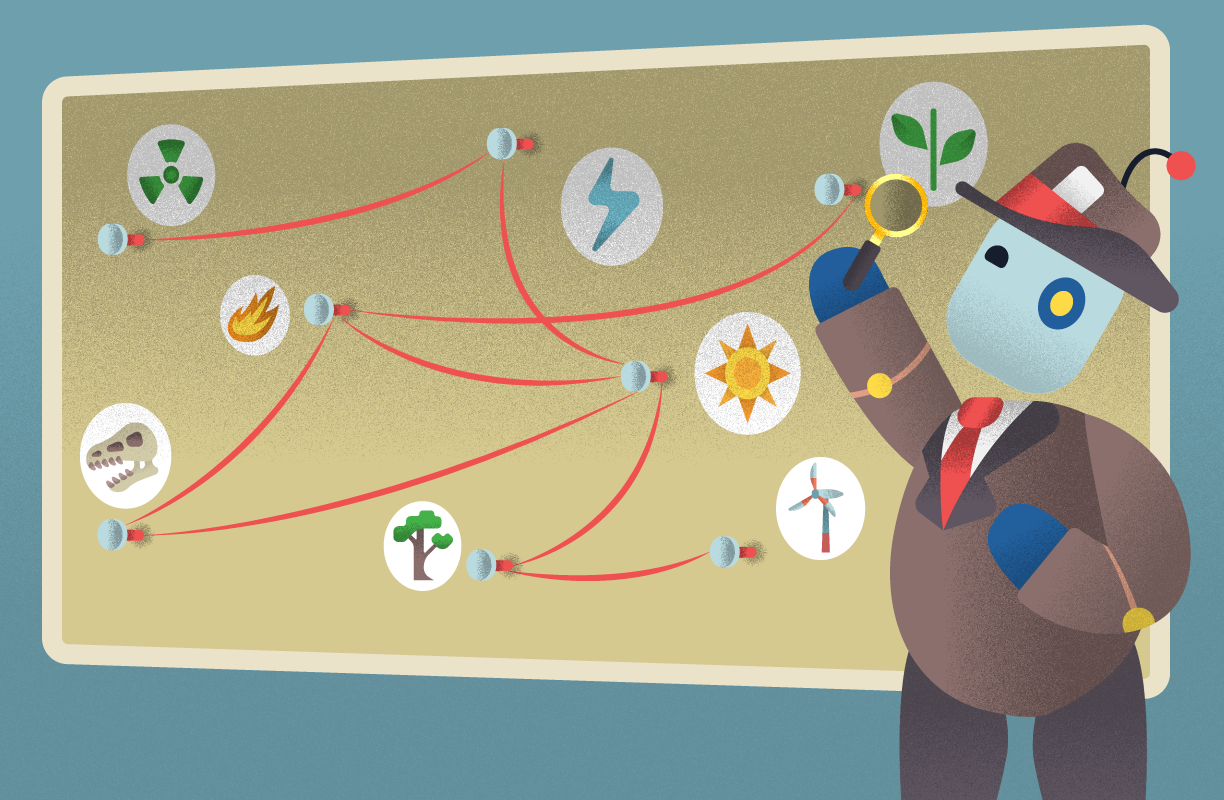

Fortunately, Optimization, Simulation, Knowledge Representation & Problem Solving do things that ML cannot (or does poorly). Taken together in an architecture, not only do they help solve complex problems, they also open the door to AI. It’s not only ML; it’s any combination of the above, as long as it solves the end problem.

At Kado, this is how we see our world. This is what motivates our mission.

So “enough tall claims; show me how that works” you say? No problem. I’ll illustrate using 3 examples, each of them a top-level real world system.

Example 1 — Smart Buildings

The vision for a Smart Building is a structure that’s completely autonomous. There are many functions — Surveillance, Incident response, Security, Access control, Energy systems, HVAC, Maintenance to name a few. The point is to bring autonomy to each, as well as have something that coordinates and orchestrates them into a single whole.

Take one, surveillance, for example. You’ll need to model faces of individuals, scale them to a crowd, model crowd behaviour, and determine intent. This requires layers of vision models. You can of course try doing all of it with a single deep net, and it might work, but you’ll soon face an uphill battle working changing scenarios into the mix. To do this well, you’ll need Morphometrics, face models, video models of individuals, crowd simulations and behaviour simulations. Learning and Simulation working together.

Say your system detects unauthorized activity in a Smart Building. What then? That would be the domain of Incident Response. To model that, you’ll need to bring together a face recognizer, crowd & behaviour models, a logical representation of the building from the BIM system, rules & regulations represented as a knowledge base and policies/procedures on scenarios and incidents, all into a Logical Reasoner (which acts like a Planner). Learning, Simulation, Knowledge Representations and Reasoning — all working together.

Example 2 — Generative Design

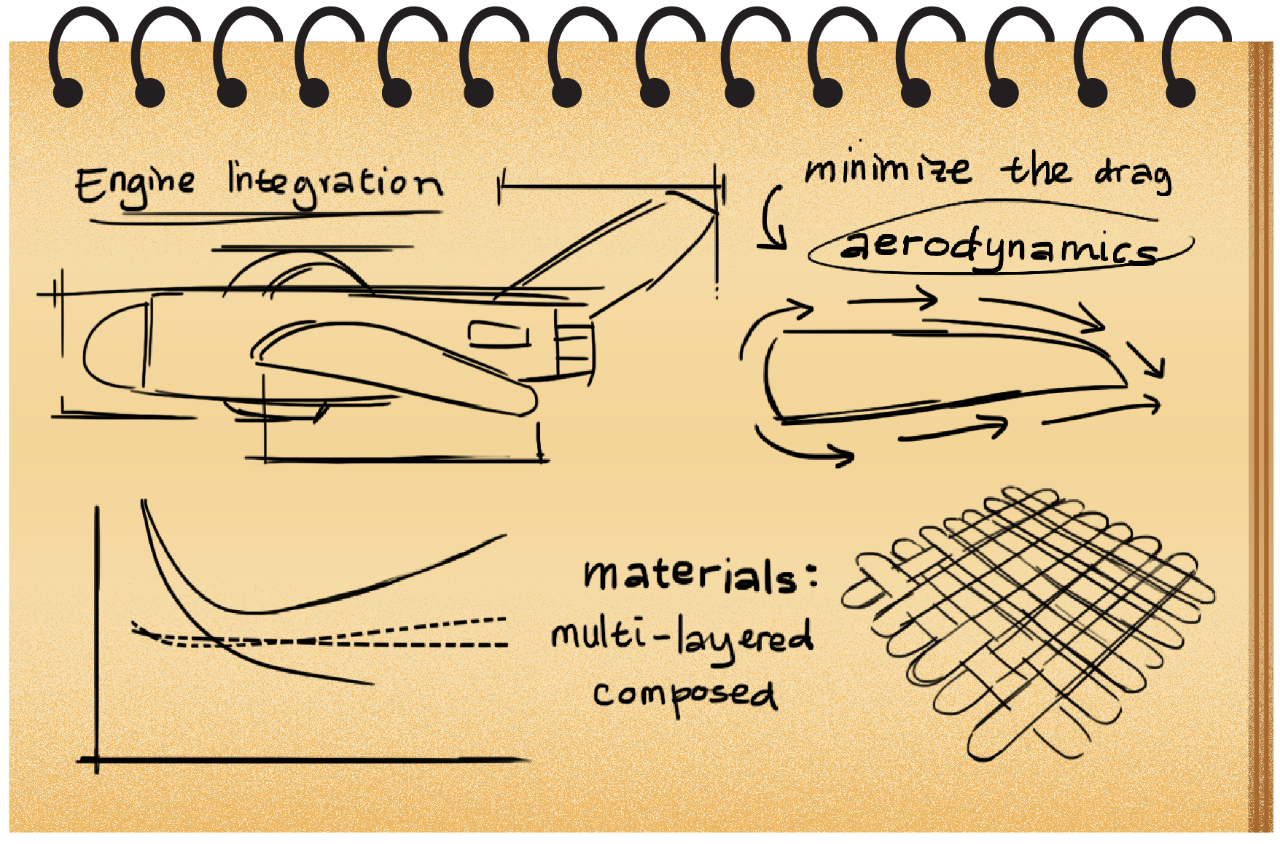

Moving on, let’s consider Aircraft Wing Design. There are two aspects: shape design & material design. We’ll focus on the former, which should ring a bell if you’ve ever watched a wind tunnel scene. The point of Generative Design is to have a human designer working hand in hand with an autonomous system, co-creating and co-designing things. The latter suggests design options & handles details, while the human guides the process.

This is a problem that’s complex in a unique way: the problem space and the solution space are different. The former concerns itself with operating conditions and requirements, while the latter deals with wing shape & aerofoil parameters. In order to successfully design a working wing, the optimizer needs to navigate the solution space to find candidates. Each candidate’s energy value, driven by simulations of wing performance, lives in the problem space. The training process is an iterative one: a) optimize for candidates in the solution space, and b) simulate in the problem space to know their performance.

The problem is that, without guidance, the simulations have to cover the entire problem space. Unless you have a way of knowing which simulations to run, you’ll need to do all of them in every cycle. This is very expensive and wasteful. One way to address this is to train a surrogate learner on prior simulation data and results. This way, you may use the learner as a filter to determine candidate simulations and run only those at every step.
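Here is a rough sketch of that loop, under heavy assumptions. The one-parameter "wing", the `simulate` objective, and the nearest-neighbour surrogate are all hypothetical stand-ins I picked to keep the example tiny; a real setup would use a proper aerodynamic simulation and a trained learner.

```python
import random


def simulate(x):
    """Stand-in for an expensive wing simulation (hypothetical objective)."""
    simulate.calls += 1
    return (x - 0.3) ** 2  # lower is better; optimum assumed at x = 0.3


simulate.calls = 0  # count how many "expensive" runs we pay for


def surrogate_predict(history, x):
    """Crude surrogate: reuse the result of the nearest simulated design."""
    _, nearest_y = min(history, key=lambda p: abs(p[0] - x))
    return nearest_y


def design_loop(cycles=30, candidates_per_cycle=20, keep=3, seed=0):
    rng = random.Random(seed)
    # Prior simulation data that the surrogate starts from.
    history = [(x, simulate(x)) for x in (0.0, 0.5, 1.0)]
    for _ in range(cycles):
        best_x, _ = min(history, key=lambda p: p[1])
        # a) Propose candidates in the solution space near the current best.
        candidates = [
            min(1.0, max(0.0, best_x + rng.gauss(0, 0.1)))
            for _ in range(candidates_per_cycle)
        ]
        # Surrogate as a filter: shortlist the most promising candidates.
        shortlist = sorted(
            candidates, key=lambda x: surrogate_predict(history, x)
        )[:keep]
        # b) Simulate only the shortlist; results become new training data.
        history.extend((x, simulate(x)) for x in shortlist)
    return min(history, key=lambda p: p[1]), history


(best_x, best_y), history = design_loop()
print(f"best x={best_x:.3f}, simulations run={simulate.calls}")
```

With these defaults the loop proposes 600 candidates but pays for only 93 simulations (3 seed runs plus 3 per cycle). The surrogate here is deliberately naive; the point is the shape of the loop, not the quality of the filter.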

Optimization, Simulation and Learning — all intimately interlocked in a continuous dance during a single training run. Isn’t that cool?

Example 3 — DRP in Supply Chain

Another problem: Disaster Recovery Planning (DRP) in a Supply Chain. During the recent Covid-19 outbreak, the meat industry was in trouble. Because plants were shut down due to infection, there were no meat shipments to restaurants and store shelves. Meanwhile, millions of meat animals were being slaughtered and disposed of because it was too expensive to keep them. Being designed for hyper-efficiency means no extra capacity to buffer shocks to the system.

An ounce of prevention beats a pound of cure. What companies need to deal with disasters is a plan and process set up beforehand that involves recovery agreements with key players in the entire supply chain.

To effectively support this process, a DRP knowledge base is needed, one that brings together key pieces of information and their inter-relationships from each of the participants. This would be open-world, and would combine structured Supply Chain data with unstructured data to stay up to date with “what’s going on right now” in the supply chain. Because of its open nature, a traditional rules-based application running on top of a relational database (the standard fare for traditional supply chain applications) will just not cut it. You need a knowledge representation, a graph or an ontology, with logical reasoning running on top.
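As a toy illustration of "a graph with reasoning on top", the sketch below stores facts as subject/predicate/object triples and applies one rule until nothing new can be derived. The entities (`PlantA`, `DistributorB` and so on), the predicates and the rule are all invented for this example, and a plain Python set does not capture open-world semantics; a real system would sit on a graph database or an ontology reasoner.

```python
# Hypothetical supply chain facts as (subject, predicate, object) triples.
facts = {
    ("PlantA", "supplies", "DistributorB"),
    ("DistributorB", "supplies", "RetailerC"),
    ("PlantD", "supplies", "RetailerE"),
    ("PlantA", "status", "shut_down"),
}


def infer_at_risk(facts):
    """Forward-chain one rule to a fixpoint:
    if X is shut down or at risk, and X supplies Y, then Y is at risk.
    """
    known = set(facts)
    changed = True
    while changed:
        changed = False
        # Everything currently known to be disrupted.
        affected = {
            s for s, p, o in known
            if p == "status" and o in ("shut_down", "at_risk")
        }
        for s, p, o in list(known):
            if p == "supplies" and s in affected:
                new_fact = (o, "status", "at_risk")
                if new_fact not in known:
                    known.add(new_fact)
                    changed = True
    return known


# Only the facts the reasoner derived, not the ones we asserted.
derived = infer_at_risk(facts) - facts
for fact in sorted(derived):
    print(fact)
```

The shutdown at `PlantA` propagates to `DistributorB` and then to `RetailerC`, while the unrelated `PlantD` to `RetailerE` link is left alone. Nobody wrote "RetailerC is at risk" anywhere; the reasoner worked it out from the relationships.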

This knowledge base would feed a scenario analyzer — a simulation. For complex supply chains like high tech or automotive, you’d also need an Autonomous Decision Support System — one that can work its way through the current state of affairs, weigh scenarios and suggest options for decision making by a human.

Knowledge representation, Simulation and Logical Reasoning working hand in hand.

- - -

Three very different problems, each solved by not just one, but a mix of methods. To us at Kado, that is amazing! This is complexity in real-world applications that is not effectively simplified or addressed by using rules, where more than one method needs to be pulled in to work together.

1. Learn

2. Optimize

3. Simulate

4. Represent

5. Solve

6. Deal with Uncertainty

7. Compute

Items 6 (Statistics) and 7 (Computer Science) are whole fields in themselves. We only care about those aspects relevant to items 1–5.

This is how we see the world

Machine Learning is King, but of narrow territory. Hero Methods do things that ML cannot. Taken together, not only do they help solve complex problems, they also open the door to AI.

Get in touch

- Milton, ON L9T 6T2, Canada

- help@kado.ai

- +1 416 619 0517

Copyright © 2025
