Probabilistic Programming — A gentle Intro

Oct 17, 2022 | Jagannath Rajagopal | 3 min read

Probabilistic Programming = Probabilistic Modelling as a Computer Program

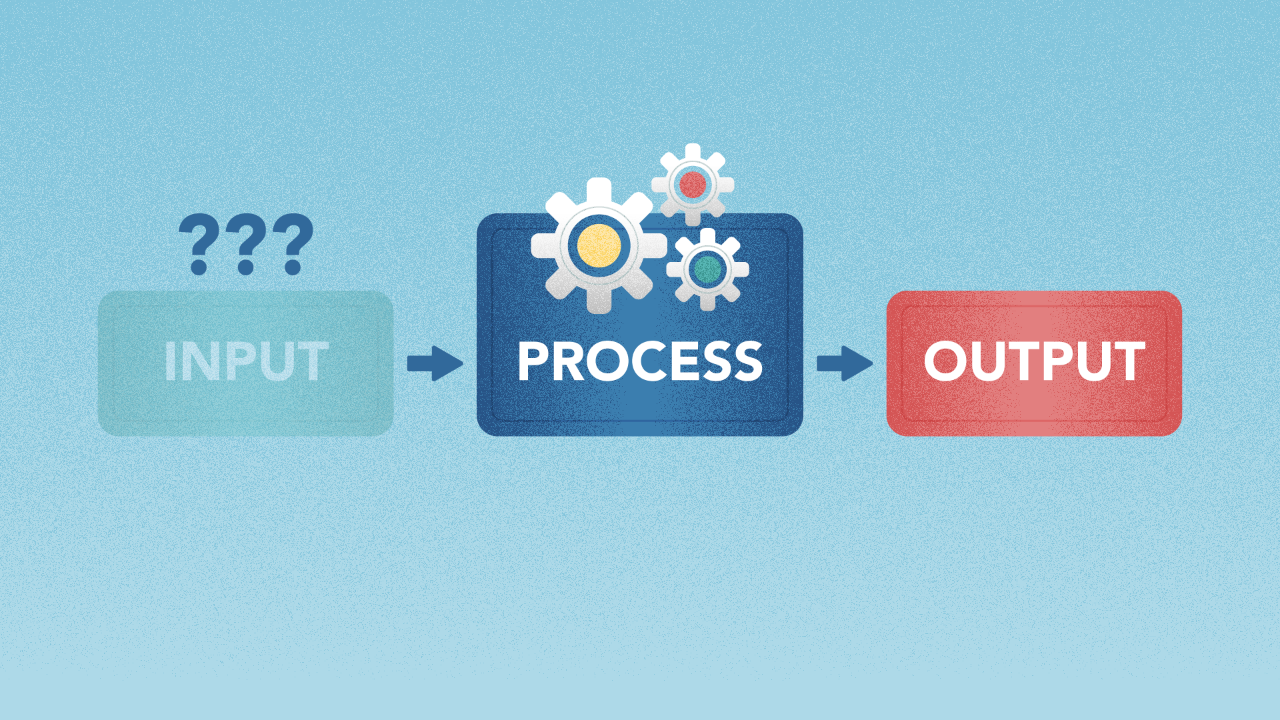

With Machine Learning, you predict an effect from a set of causes. With that alone, we’ve seen a mass proliferation of applications that do amazing things in different domains. Machine Learning problems can also be modelled with probabilistic reasoning. Probabilistic Graphical Models let you represent probabilistic relationships between factors and targets. With this, you can reason about problems and represent them in a rich and powerful way.

Probabilistic Programming, a programming paradigm centred on probabilistic reasoning, takes this further. Given an effect, you can forensically analyze its cause or set of causes. You can do this with Machine Learning too, but only in a limited way. If you know that an outcome has occurred, you can use a probabilistic model of the problem to calculate the odds that a given factor contributed to that outcome.
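To make the idea of reasoning from effect back to cause concrete, here is a minimal sketch using Bayes' theorem. The scenario (wet grass), the cause names, and every number are hypothetical, chosen only for illustration. The sketch also assumes the causes are mutually exclusive, which a real model would not require.

```python
# Hypothetical numbers for illustration only; causes are assumed mutually exclusive.
prior = {"rain": 0.2, "sprinkler": 0.1, "neither": 0.7}        # P(cause)
likelihood = {"rain": 0.9, "sprinkler": 0.8, "neither": 0.05}  # P(wet grass | cause)


def posterior(prior, likelihood):
    """Bayes' theorem: P(cause | effect) = P(effect | cause) * P(cause) / P(effect)."""
    joint = {cause: prior[cause] * likelihood[cause] for cause in prior}
    evidence = sum(joint.values())  # P(effect), summed over all causes
    return {cause: joint[cause] / evidence for cause in joint}


# Given that the grass is wet, how likely is each cause?
post = posterior(prior, likelihood)
for cause, p in sorted(post.items(), key=lambda item: -item[1]):
    print(f"P({cause} | wet grass) = {p:.3f}")
```

Even though rain is not the most likely state of the world beforehand, it becomes the most likely explanation once the wet grass has been observed.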


- - -

The inference described above assumes you already have a model that is ready and available. As in Machine Learning, you can handcraft probabilistic models, and depending on the situation it may be easier to do so. However, the power of either method is revealed when you have data about the world. Probabilistic models and programs may be trained with data to accurately capture the odds of choices at different steps.
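As a rough sketch of what training with data can mean in the simplest case, the odds in a model can be estimated by counting how often each outcome follows each cause. The observations and the `fit` helper below are hypothetical; real probabilistic programming systems use more sophisticated estimation, so treat this as an illustration of the idea rather than a recipe.

```python
from collections import Counter, defaultdict

# Hypothetical observations of (cause, effect) pairs.
observations = [
    ("rain", "wet"), ("rain", "wet"), ("rain", "dry"), ("rain", "wet"),
    ("none", "dry"), ("none", "dry"), ("none", "wet"), ("none", "dry"),
]


def fit(observations):
    """Estimate P(cause) and P(effect | cause) from relative frequencies."""
    cause_counts = Counter(cause for cause, _ in observations)
    pair_counts = Counter(observations)
    total = len(observations)

    prior = {cause: count / total for cause, count in cause_counts.items()}
    conditional = defaultdict(dict)
    for (cause, effect), count in pair_counts.items():
        conditional[cause][effect] = count / cause_counts[cause]
    return prior, dict(conditional)


prior, conditional = fit(observations)
print(prior)
print(conditional)
```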

As new knowledge comes in, the underlying probabilistic model may be enhanced on the fly, that is, online. This too is a goal of Machine Learning, but we are still a ways away. With the ability to learn as you go, we can improve knowledge of a domain in a flexible manner.
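One common way to update a belief online is a Beta-Bernoulli update, where each new yes/no observation nudges the estimate. This technique is my choice for illustration, not something the article prescribes, and the `BetaBelief` class and the observation stream are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class BetaBelief:
    """Belief about the probability of a yes/no event, stored as Beta(alpha, beta)."""
    alpha: float = 1.0  # 1 + number of "yes" observations (uniform starting belief)
    beta: float = 1.0   # 1 + number of "no" observations

    def update(self, outcome: bool) -> None:
        """Fold in one new observation without revisiting past data."""
        if outcome:
            self.alpha += 1
        else:
            self.beta += 1

    @property
    def mean(self) -> float:
        """Current estimate of the event's probability."""
        return self.alpha / (self.alpha + self.beta)


belief = BetaBelief()
for outcome in [True, True, False, True]:  # hypothetical stream of observations
    belief.update(outcome)
    print(f"estimate after update: {belief.mean:.3f}")
```

The estimate is revised after every observation, so the model never needs to be retrained from scratch.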

Execution is a fundamental concept in Programming. You run a program to get your output. A probabilistic program does this as well. The difference is that a probabilistic program can take different execution paths and so produce different outputs. This is based on probabilistic choices made at different steps in the program. Each choice can have different outcomes, with the odds of each captured in the program. One or more outcomes can serve as input to a subsequent choice, which has its own outcomes and odds. The underlying model may hence be viewed as a tree or a graph. So you can think of a probabilistic program as a probabilistic graphical model represented in a computer program.

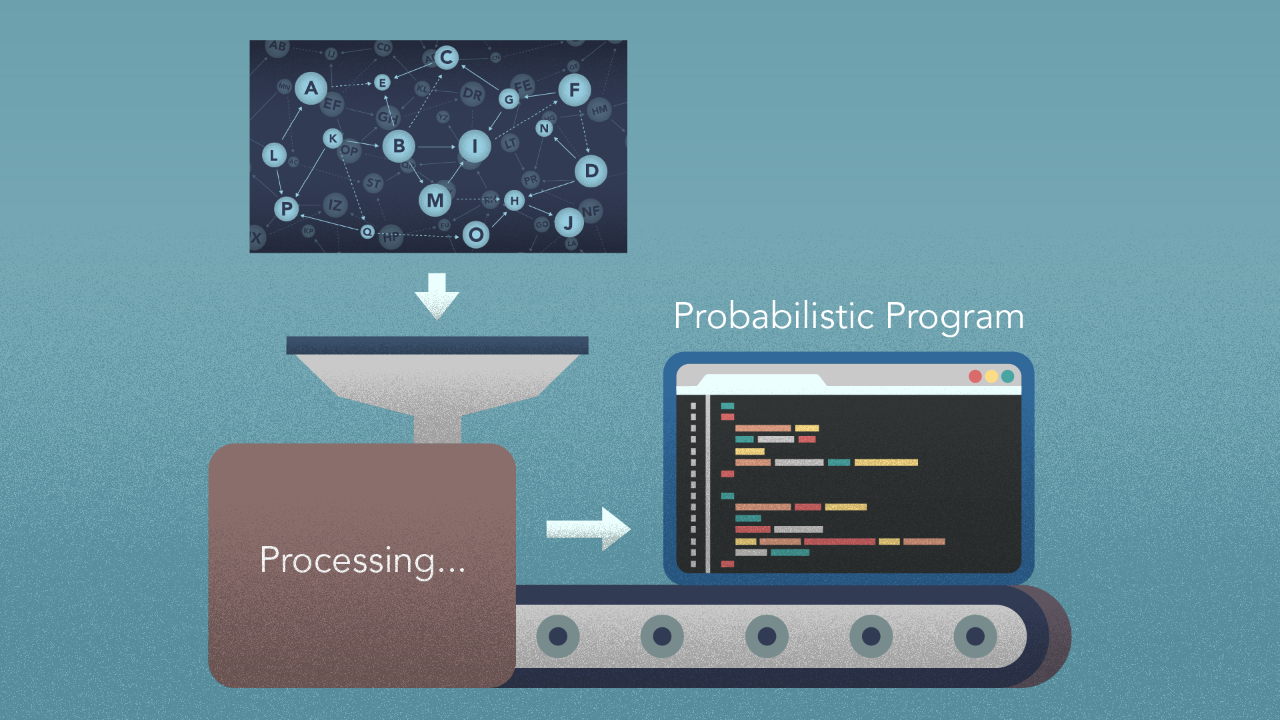
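The idea of execution paths can be sketched in plain Python, without any probabilistic programming library. The toy `commute` program below and all of its probabilities are hypothetical. Each run makes three random choices, where later choices depend on earlier ones, and records the path it took.

```python
import random
from collections import Counter


def commute(rng):
    """One run of a toy probabilistic program; returns (path taken, output)."""
    path = []

    raining = rng.random() < 0.3                        # first choice
    path.append("rain" if raining else "no_rain")

    traffic = rng.random() < (0.8 if raining else 0.2)  # odds depend on rain
    path.append("traffic" if traffic else "clear")

    late = rng.random() < (0.7 if traffic else 0.1)     # odds depend on traffic
    path.append("late" if late else "on_time")

    return tuple(path), late


rng = random.Random(0)  # fixed seed so the runs are repeatable
runs = [commute(rng) for _ in range(10_000)]

path_counts = Counter(path for path, _ in runs)
late_rate = sum(late for _, late in runs) / len(runs)

for path, count in path_counts.most_common():
    print(" -> ".join(path), count)
print(f"estimated P(late) = {late_rate:.3f}")
```

Running the program many times approximates the odds of each path through the tree. Under these assumed numbers, the exact probability of being late is 0.328, and the sampled estimate should land close to it.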

Unlike in Machine Learning, the only operations available in Probabilistic Programming are the core operations of probability, such as conditioning on evidence and summing over possible outcomes.

Probabilistic Graphical Models are great at mitigating the black box problem prevalent in Machine Learning. You can easily trace the link between cause and effect. Given a program and a specific outcome, you can trace back the odds that a given factor influenced it. This makes probabilistic programs explainable. You can look under the hood to understand what went on, which goes a long way in building trust in the model.
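Tracing an outcome back to its factors can be sketched by enumerating every execution path that leads to that outcome and comparing their weights. The toy three-step model below (rain, then traffic, then being late) and all of its numbers are hypothetical, and exhaustive enumeration is only practical for small models like this one.

```python
from itertools import product

# Hypothetical model parameters.
P_RAIN = 0.3
P_TRAFFIC = {True: 0.8, False: 0.2}  # P(traffic | rain?)
P_LATE = {True: 0.7, False: 0.1}     # P(late | traffic?)


def bern(p, value):
    """Probability that a yes/no choice with P(yes) = p takes the given value."""
    return p if value else 1.0 - p


def joint(rain, traffic, late):
    """Probability of one complete execution path."""
    return (bern(P_RAIN, rain)
            * bern(P_TRAFFIC[rain], traffic)
            * bern(P_LATE[traffic], late))


def posterior_given_late(factor_index):
    """P(factor is True | late), by summing over every path that ends in 'late'."""
    paths = [(rain, traffic, True) for rain, traffic in product([True, False], repeat=2)]
    evidence = sum(joint(*path) for path in paths)
    hits = sum(joint(*path) for path in paths if path[factor_index])
    return hits / evidence


p_rain = posterior_given_late(0)
p_traffic = posterior_given_late(1)
print(f"P(rain | late)    = {p_rain:.3f}")
print(f"P(traffic | late) = {p_traffic:.3f}")
```

Because every path and its weight is visible, the answer to "why was I late?" can be read directly from the model rather than inferred from an opaque set of learned weights.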

However, that very thing is a limitation: the rich and complex representations that Machine Learning models are known for are not available to probabilistic models. In Machine Learning, there is a large variety of operations, or activations in ML jargon, to choose from. In Probabilistic Programming, by contrast, the only operations are the rules of probability, chief among them Bayes’ theorem.

- - -

At Kado, we ponder different ways to learn from data. Probabilistic Graphical Models and Probabilistic Programming are great ways to do that. They solve a fundamental problem in Hero Methods, explainability, but in doing so they sacrifice representational power.

This is how we see the world

Machine Learning is King, but of narrow territory. Hero Methods do things that ML cannot. Taken together, not only do they help solve complex problems, they also open the door to AI.
