Marrying Neural Nets with Symbolic AI: Neurosymbolic models

Oct 17, 2022 | Jagannath Rajagopal | 5 min read

A few years back, NVIDIA announced that a computer had beaten a human at the task of image recognition for the first time. The “machine” was a Convolutional Neural Network, while the human was Andrej Karpathy, who wrote what was, at the time, the best article on how they work.

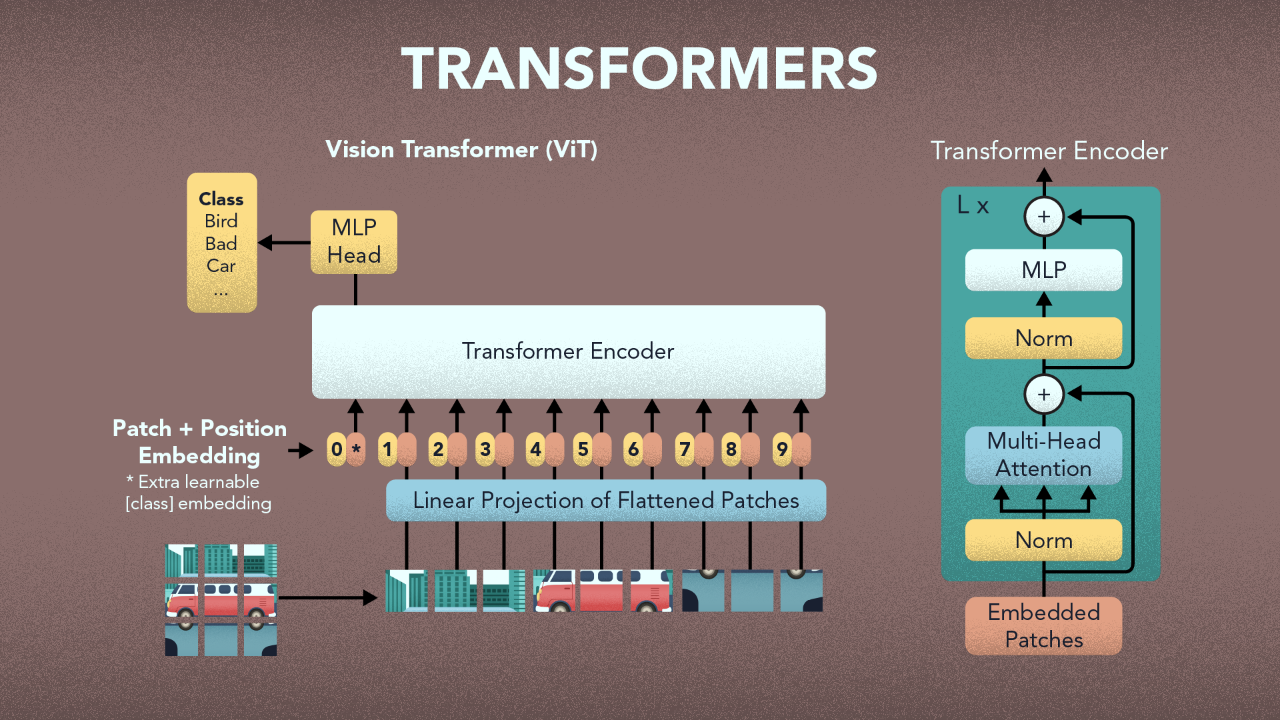

With ImageNet, GPUs, TensorFlow/PyTorch, Convolutional Nets, Attention and now Transformers, state-of-the-art image recognition uses model architectures that are Deep Learning based. It is rumoured that the production versions of these models are so large that a single training run would cost a tech giant a couple of million dollars in computing resources. All of that is not for naught, since they crunch through billions of images and do well on large-scale problems.

State-of-the-art image-to-text models can create compellingly accurate descriptions of the content in images. This is a necessary step if we are to get anywhere with machine perception.

For all this, Deep Learning methods have some fundamental weaknesses which progress thus far has not addressed. The first thing that would come to mind for anyone with even the most basic knowledge of Machine Learning is overfitting. If you have a bit more experience, you would instead mention the problem of generalization.


- - -

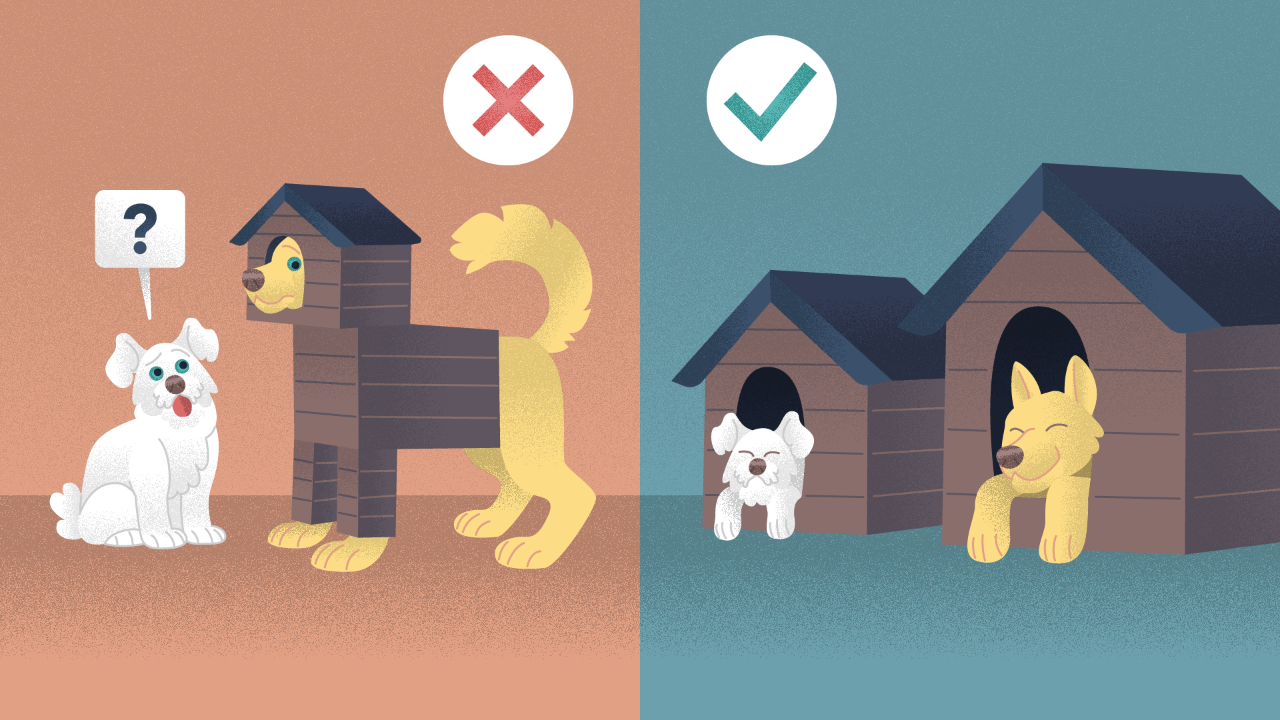

Models struggle when the data is “off-sample”, a fancy term data scientists use for the scenario where what the model encounters differs from what it was trained on. A person born in South India in the 1970s (I am talking about myself) would still recognize a Golden Delicious as an apple the first time he sees one. If a deep learning model is only trained on the red kind, I highly doubt it would generalize well to green or golden.

In a recent MIT video, David Cox outlines some problems with image models. He had his team put a colorful guitar in front of a monkey in a forest. The model was then fooled into thinking the monkey was a person. The saving grace is that the model was only 43% sure of this. One could anthropomorphize this away as the model at least knowing something about what it was seeing and being able to “tell” that something was off. There is a problem with this statement, one we make all the time when discussing models at work: the closer we get to true AI, the less anthropomorphizing one should do… ok, let’s move on.

A similar test involved a bicycle instead of the guitar, with the same result.

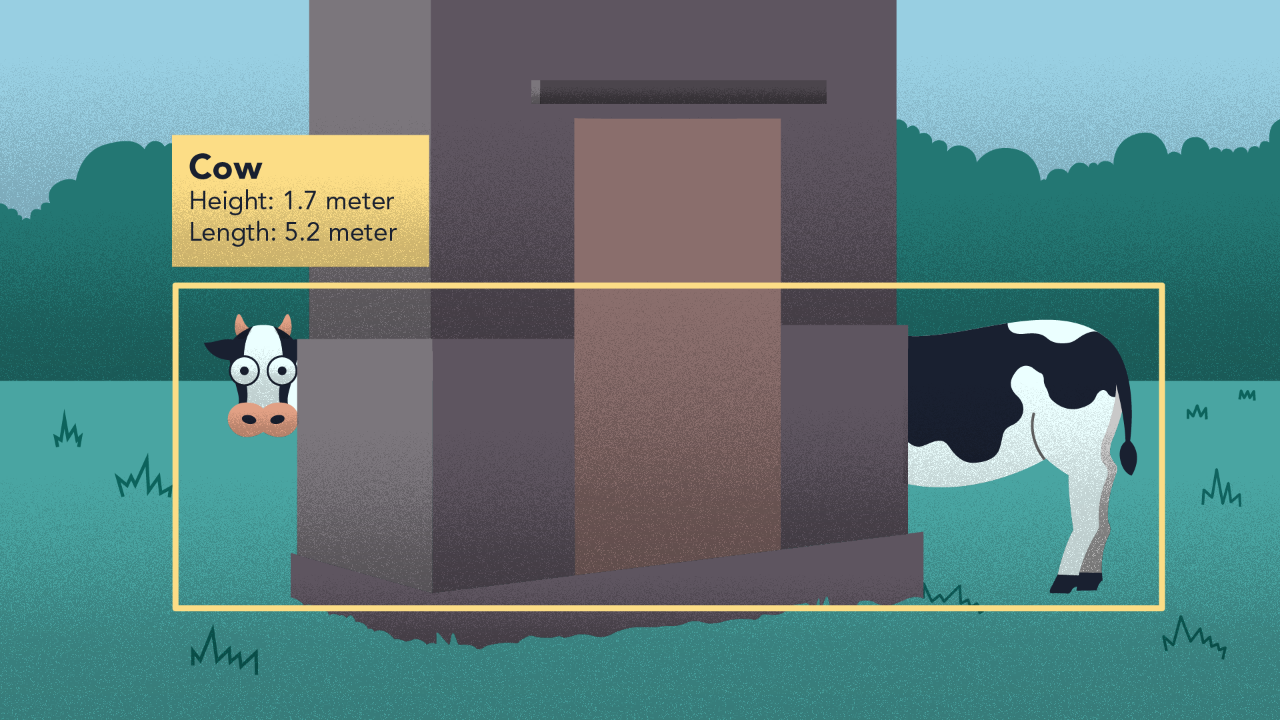

I recently saw an image floating around on the internet of the world’s longest cow. On the one hand, I think it’s actually amazing that the model picked up the inter-relationship. On the other hand, even a child would know there is something off about the picture. Or, if the child was anything like I was at that age, it would first giggle for 5 minutes straight!

When a person sees an image, the mind automatically performs a lot of reasoning along with it. As such, we do not possess the ability to turn off brain functions like that on demand. If there is an upside down cat, or a car that’s on fire, or dollar bills stuffed inside a mattress, we would know it when we see it. But it’s not always clear that state of the art models can, if their training data does not contain enough samples to elicit said patterns.

Even when patterns are elicited, they could sometimes be mashed together inside the weights, biases and activations of the hidden layers. As such we lose atomic control over such operations.

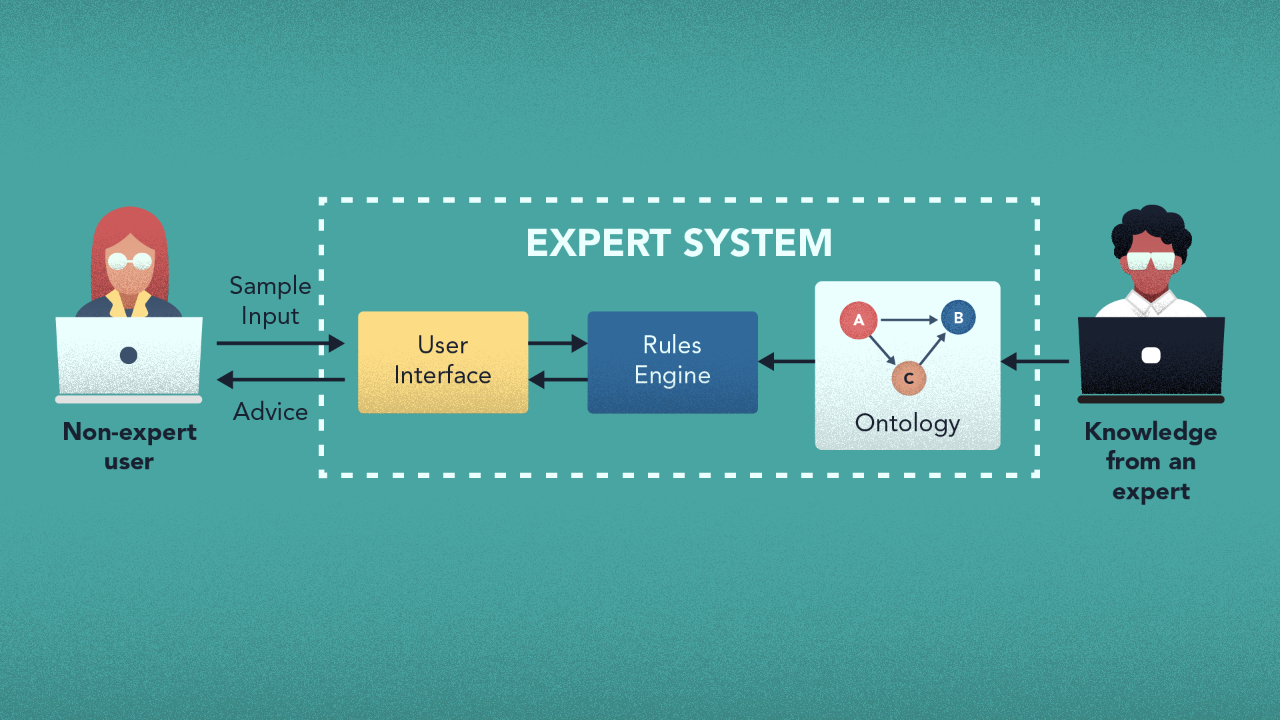

There is a large set of tasks that Deep Learning does not do well on its own. But many of the above may be solved with another traditional paradigm — symbolic AI. Its strengths lie in formal and explicit knowledge representations. For an apple, you could describe its properties as a graph or a tree — or another suitable structured form. As long as there is structure, there is meaning which allows for logical reasoning to be performed.
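To make the apple example concrete, here is a minimal sketch of such a structured representation. The triples, relation names and helper functions are all hypothetical, made up for illustration; a real symbolic system would use a far richer ontology and reasoner.

```python
# Hypothetical toy knowledge graph: (subject, relation, object) triples.
TRIPLES = {
    ("golden_delicious", "is_a", "apple"),
    ("red_delicious", "is_a", "apple"),
    ("apple", "is_a", "fruit"),
    ("apple", "has_shape", "round"),
    ("apple", "has_part", "stem"),
    ("golden_delicious", "has_color", "yellow"),
    ("red_delicious", "has_color", "red"),
}


def ancestors(entity, triples=TRIPLES):
    """Return every class reachable from `entity` by following is_a edges."""
    found = set()
    frontier = [entity]
    while frontier:
        current = frontier.pop()
        for subj, rel, obj in triples:
            if subj == current and rel == "is_a" and obj not in found:
                found.add(obj)
                frontier.append(obj)
    return found


def properties(entity, triples=TRIPLES):
    """Collect properties of `entity`, including those inherited via is_a."""
    scope = {entity} | ancestors(entity, triples)
    return {
        (rel, obj)
        for subj, rel, obj in triples
        if subj in scope and rel != "is_a"
    }


if __name__ == "__main__":
    print(ancestors("golden_delicious"))
    print(properties("golden_delicious"))
```

Because the structure is explicit, a Golden Delicious inherits "round" and "has a stem" from apple without ever having been seen before, and its colour stays a property of the variety rather than of apples in general.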

Symbolic AI, aka GOFAI (Good Old-Fashioned AI), formed the basis of what was popular in the 1970s: expert systems. These quickly died out because the particular manifestation at the time was riddled with issues. In an overview of Cyc, Doug Lenat mentions an estimate of about 130 fundamental issues that caused expert systems to fail to scale. Nevertheless, the field is guilty of confusing the manifestation for the method: it’s expert systems that are troublesome, and not necessarily Symbolic AI.

As recently as 2019, there has been a resurgence of interest in Symbolic AI, particularly as a way to mitigate these problems of Deep Learning. Since neural nets are not very good at composing and learning meaning, Symbolic AI is seen as a way to assist with that. The two, Deep Learning and Symbolic AI, would work hand in hand to create the family of models after which this article is named.

Neurosymbolic models offer promise in improving the capabilities and performance of Deep Learning. They would also assist Symbolic AI with one of its weaknesses — learning and dealing with large datasets.

At Kado, we are optimistic about Neurosymbolics. To start, the two can be loosely integrated, where the output of the neural net feeds the symbolic model. To gain more out of the neural net though, its training process should ideally be influenced by the outcome of logical reasoning on the symbolic side.
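The loose integration described above might look something like the following sketch. Everything here is an assumption made for illustration: `neural_stub` stands in for a trained network and returns canned predictions, and `EXPECTATIONS` is a made-up rule table, not a description of any actual Kado system.

```python
from typing import Dict, List

# Hypothetical symbolic knowledge: expected attribute values per label.
EXPECTATIONS: Dict[str, Dict[str, str]] = {
    "cat": {"orientation": "upright"},
    "car": {"state": "intact"},
}


def neural_stub(image_id: str) -> dict:
    """Stand-in for a trained network; returns canned predictions."""
    canned = {
        "img_cat": {"label": "cat", "attributes": {"orientation": "upside_down"}},
        "img_car": {"label": "car", "attributes": {"state": "intact"}},
    }
    return canned[image_id]


def symbolic_check(prediction: dict) -> List[str]:
    """Compare the net's output against the symbolic expectations."""
    label = prediction["label"]
    observed = prediction["attributes"]
    expected = EXPECTATIONS.get(label, {})
    return [
        f"{label}: expected {attr}={want}, observed {attr}={observed[attr]}"
        for attr, want in expected.items()
        if attr in observed and observed[attr] != want
    ]


def pipeline(image_id: str) -> dict:
    """Loose integration: neural output flows one way into symbolic reasoning."""
    prediction = neural_stub(image_id)
    return {"label": prediction["label"], "violations": symbolic_check(prediction)}


if __name__ == "__main__":
    print(pipeline("img_cat"))
    print(pipeline("img_car"))
```

Information only flows one way here, from the net to the rules. A tighter integration would feed the violations back into training, for example as an extra penalty on the loss, which this sketch does not attempt.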

- - -

Sources -

World’s longest cow: Not sure! I found it on a LinkedIn post.
