Big Leaps First: What to do when your ML model fails

Oct 17, 2022 | Jagannath Rajagopal | 3 min read

When your current ML architecture does not work, what next?

My team and I have been working on a complex problem for 3 years now. There are high levels of uncertainty in the data, and telling the signal from the noise is hard. The environment is also constantly changing, which adds another layer of complexity.

We started with a really big idea: using a model to make a model adapt to a changing world. Our hypothesis was that we needed to tackle adaptability. So we had a two-model architecture. The first model would tackle the problem directly. The second model would tweak the first model and make it adapt to its environment. Out of the myriad research available, we picked suitable algorithms for each model, got them working, and tweaked them several times.

Failed.

We switched to an approach based on customizing standard off-the-shelf components. The first step would optimize the component’s parameters, and the next would use the component to train a machine learning model that acted as a filter for its output. We stopped worrying about adaptability and relied instead on frequent retraining as data came in.

Failed.

With the second approach, we kept beating that horse long after it had died. 2000 ways to not make a lightbulb? No, it’s 300 ways to not build a model. Literally. We stayed with the approach long after it had started to become clear that it was not working.

In our third major iteration, we changed the hypothesis once again. This time, we split the task into two functional parts. Each part would train its own machine learning model, and one would feed the other. Initially it was working, then it needed more work, and then it stopped working altogether.

Failed.

- - -

Our problem is in a very difficult data domain. But there are tons of approaches to try, and we’re progressively getting closer to our end goal.

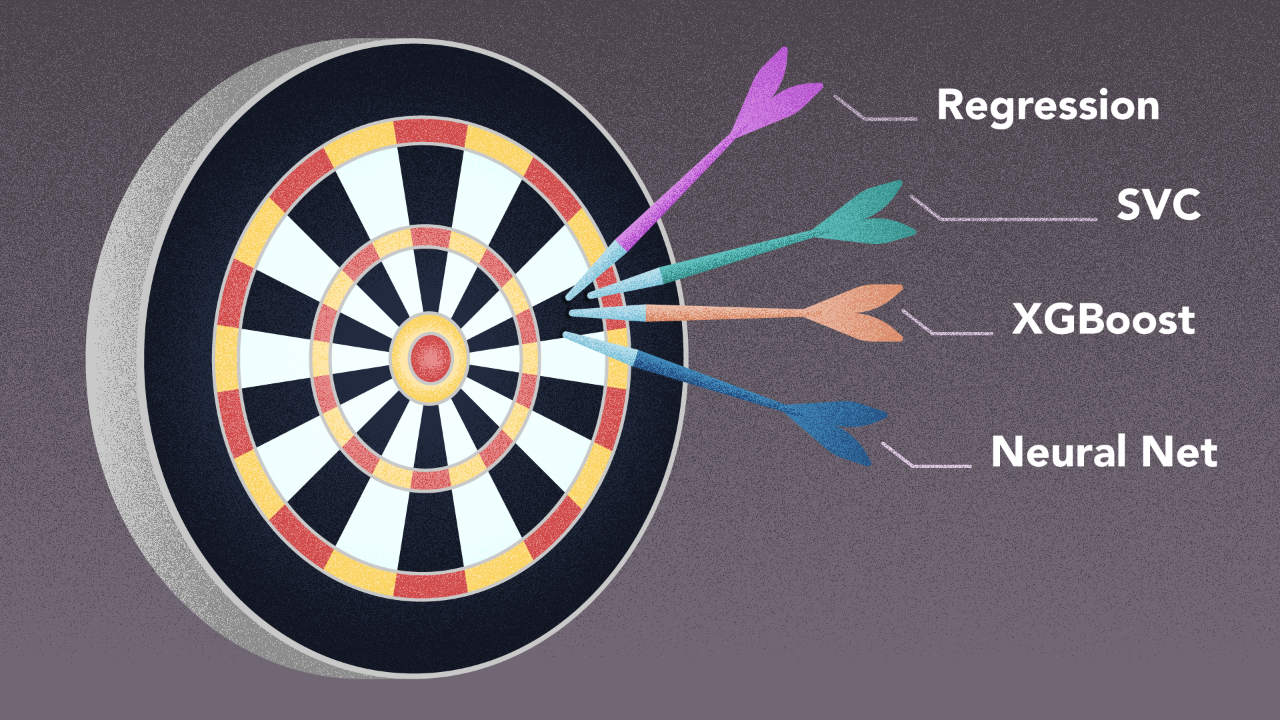

The lesson here is that with bread-and-butter learning methods (the usual suspects, like regression, decision trees, XGBoost, and neural nets), the results usually fall in a spectrum around one answer. After all, they are all trying to find the same minima (or maxima) that characterize the solution.

If that answer is far from where you want it to be, resist the urge to tweak. A few tries are enough.

At that point, you need to make a jump. Leaps, not skips. Change something significant. A good place to start is the underlying assumption or hypothesis. In our second iteration, for instance, that assumption was “stitching together standard components will work”.
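To make the rule of thumb concrete, here is a minimal Python sketch of one way to decide between tweaking and leaping. It is not our actual tooling. The function name `leap_or_tweak`, the `max_tweaks` and `spread_factor` thresholds, and the scores are all assumptions made up for illustration. The idea: if the gap between your best standard model and your target is much bigger than the spread between the standard models, more tweaking is unlikely to close it.

```python
from statistics import pstdev


def leap_or_tweak(scores, target, tries_so_far, max_tweaks=3, spread_factor=2.0):
    """Suggest "done", "tweak" or "leap" for the next experiment.

    scores        -- dict of model name -> validation score (higher is better)
    target        -- the score you actually need
    tries_so_far  -- how many tweaks were already spent on this hypothesis
    max_tweaks    -- hypothetical budget for "a few tries"
    spread_factor -- hypothetical multiplier: how many spreads count as "close"
    """
    values = list(scores.values())
    spread = pstdev(values)      # how far apart the usual suspects land
    gap = target - max(values)   # how far the best one is from the goal

    if gap <= 0:
        return "done"
    # Close to the target and tweak budget left: a tweak is still reasonable.
    if gap <= spread_factor * spread and tries_so_far < max_tweaks:
        return "tweak"
    # Otherwise the models agree on an answer that is simply not good enough.
    return "leap"


# Illustrative numbers only, not results from our project.
baseline_scores = {
    "regression": 0.61,
    "decision_tree": 0.63,
    "xgboost": 0.66,
    "neural_net": 0.64,
}

print(leap_or_tweak(baseline_scores, target=0.90, tries_so_far=1))
print(leap_or_tweak(baseline_scores, target=0.68, tries_so_far=1))
```

With these made-up scores, the four models sit within a few points of each other, so a target of 0.90 calls for a leap while a target of 0.68 still leaves room for a tweak. The thresholds are a judgment call; the point is to set them before you start, so you don't end up 300 tweaks deep.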

At Kado, it’s our job to think about how to approach complex problems in AI. You are never going to make a machine learning model work from the get-go. Or any other model in AI. So when you fail, which you most likely will, you need a plan for how you are going to conduct your experiments.
